A cloud GPU is a graphics processing unit (GPU) offered as a service over the internet to accelerate tasks that require significant parallel processing power. These tasks are common in machine learning, deep learning, high-performance computing (HPC), and media processing.

Introduction to cloud GPU technology

Cloud GPU technology represents a significant advancement in cloud computing, offering powerful computational capabilities to a wide range of applications.

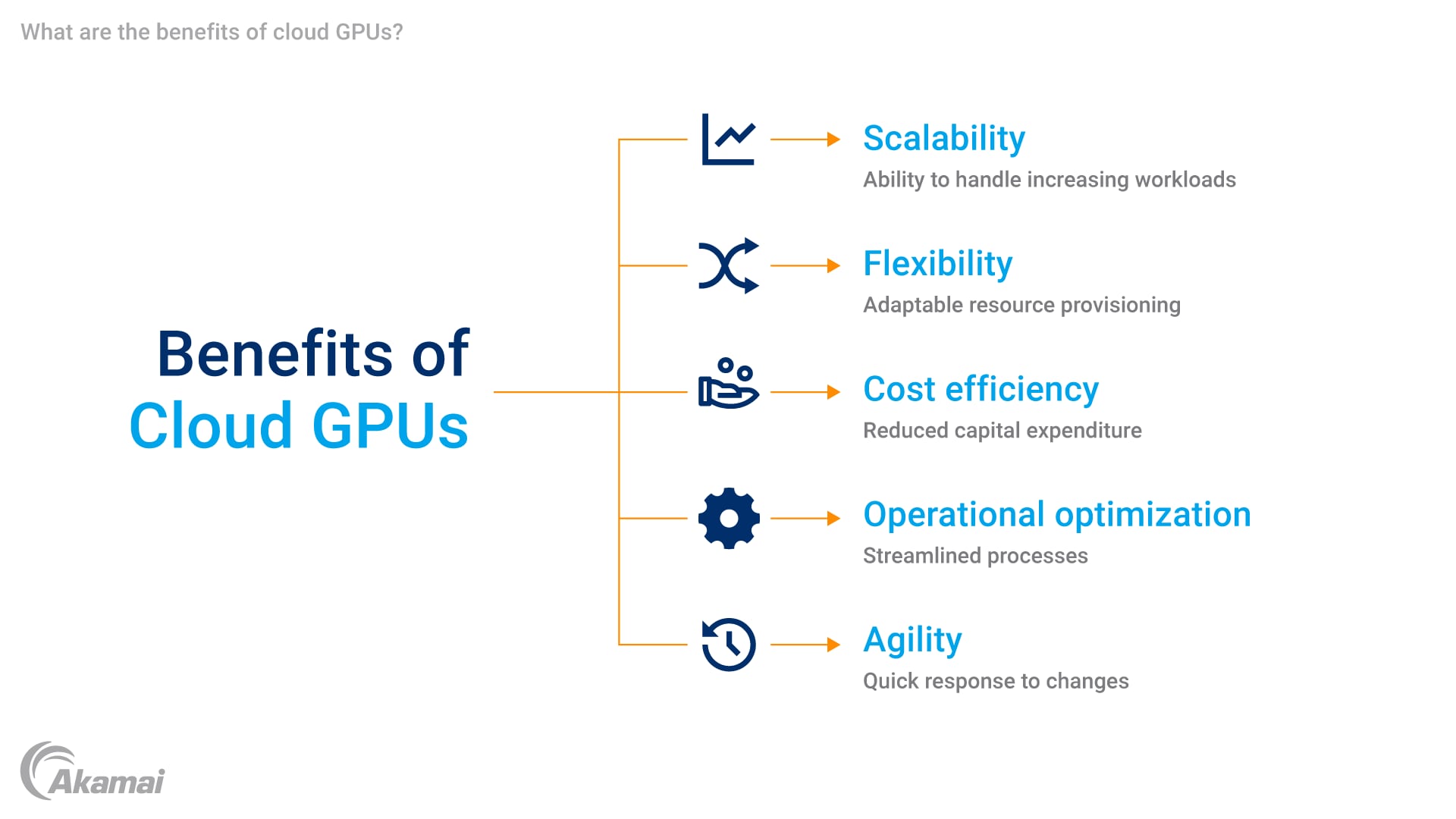

Cloud GPUs offer several benefits. They provide scalable, flexible resources that can be provisioned on demand, so businesses can handle large-scale simulations, data analytics, video processing, and AI training and inference without buying expensive on-premises hardware. This reduces capital expenditure and improves operational efficiency and agility.

Understanding cloud GPU platforms

A cloud GPU platform is a service that provides access to GPU resources over the internet. These platforms are designed to integrate seamlessly with popular frameworks like TensorFlow and PyTorch, making it easier for developers and data scientists to leverage the power of GPUs in their workflows. The integration with these frameworks ensures that users can take full advantage of the computational capabilities of GPUs without needing to manage the underlying hardware.
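As a minimal sketch of what this integration looks like from the developer's side, the snippet below checks whether PyTorch can see a GPU and falls back to the CPU otherwise. It assumes PyTorch may or may not be installed on the instance; the function name `pick_device` is illustrative and not part of any platform's API.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch  # optional dependency; only present if installed on the instance
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print(f"Running on: {device}")
```

The same code runs unchanged on a laptop and on a cloud GPU instance, so the choice of hardware stays a deployment decision rather than a code change.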

When deploying virtual machines with cloud GPU instances, users can leverage APIs to automate the provisioning and management of these resources. This allows for dynamic scaling and efficient resource utilization, which is crucial for handling variable workloads. The ability to deploy and manage GPU instances through APIs also streamlines the development and deployment processes, enabling faster time to market for artificial intelligence (AI) and machine learning applications.
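The scaling decision behind this kind of automation can be sketched in a few lines. The example below is an illustration under stated assumptions, not any provider's API: `desired_instances`, the queue depth, and the per-instance capacity are hypothetical names and numbers, and the actual provisioning call to the provider is deliberately left out.

```python
import math


def desired_instances(queued_jobs: int, jobs_per_instance: int,
                      min_instances: int = 0, max_instances: int = 8) -> int:
    """Return how many GPU instances to run for the current queue depth."""
    if jobs_per_instance <= 0:
        raise ValueError("jobs_per_instance must be positive")
    # Round up so a partially filled instance still gets provisioned.
    needed = math.ceil(queued_jobs / jobs_per_instance)
    # Clamp to the configured floor and ceiling to bound cost.
    return max(min_instances, min(needed, max_instances))


# Hypothetical workload: 25 queued jobs, each instance handles 10 at a time.
print(desired_instances(queued_jobs=25, jobs_per_instance=10))
```

A scheduler would call a function like this periodically and then ask the provider's API to add or remove instances until the running count matches the result.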

Enhancing computational power with bare-metal solutions

Bare-metal solutions offer an alternative to virtual-machine-based cloud GPU deployments. These solutions provide dedicated, single-tenant hardware that is optimized for HPC and data analytics. By eliminating the overhead associated with virtualization, bare-metal solutions can deliver superior performance and lower latency, making them ideal for applications that require real-time processing and high computational power.

For organizations that demand the highest levels of performance and security, bare-metal cloud GPU instances are a compelling choice. They offer the flexibility to customize the hardware configuration to meet specific workload requirements, ensuring that computational resources are perfectly aligned with the application’s needs. This level of customization and control is particularly valuable for large-scale simulations and complex AI models that require extensive computational resources.

Cloud GPU use cases and workflows

One of the most prominent use cases for cloud GPUs is in the field of AI and machine learning. Machine learning, deep learning, and AI training are compute-intensive tasks that can benefit significantly from the parallel processing capabilities of GPUs. Cloud GPU platforms enable organizations to train complex AI models more efficiently, reducing the time and cost associated with model development.

Real-world workflows involving AI inference, such as image and video recognition, natural language processing, and recommendation systems, can also be optimized using cloud GPUs. These workflows often require low latency and high-throughput processing, which is where GPUs excel. By leveraging the power of cloud GPUs, businesses can deliver more accurate and responsive AI applications, enhancing user experiences and driving innovation.

While much of the initial AI hype focused on training large models, more than 80% of computational demand comes from inference tasks. Organizations are under pressure to deliver on the promises of AI, but impulsive investments driven by hype can lead to significant cost overruns, so balancing ambition with efficiency, particularly in inference, is essential. That starts with understanding what “good” looks like: weighing cost against desired outcomes such as low latency, fast inference times, high accuracy, and even sustainability goals. Doing so requires careful planning, optimization, and monitoring throughout the AI model lifecycle.
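One way to make “good” concrete is to put cost and latency side by side for each candidate setup. The sketch below uses made-up prices, throughput figures, and instance names purely for illustration; real numbers would come from your own benchmarks and your provider's price list.

```python
def cost_per_1k_inferences(hourly_price: float, inferences_per_second: float) -> float:
    """Cost in dollars to serve 1,000 inferences at a sustained throughput."""
    if inferences_per_second <= 0:
        raise ValueError("inferences_per_second must be positive")
    inferences_per_hour = inferences_per_second * 3600
    return hourly_price / inferences_per_hour * 1000


# Hypothetical candidates: (name, $/hour, inferences/second, p95 latency in ms)
candidates = [
    ("small-gpu", 1.00, 50.0, 120.0),
    ("large-gpu", 4.00, 400.0, 40.0),
]
latency_budget_ms = 100.0  # assumed service-level target

for name, price, throughput, p95_ms in candidates:
    cost = cost_per_1k_inferences(price, throughput)
    verdict = "meets" if p95_ms <= latency_budget_ms else "misses"
    print(f"{name}: ${cost:.4f} per 1k inferences, {verdict} the latency budget")
```

In this invented example the more expensive instance is both cheaper per inference and within the latency budget, which is the kind of result that only shows up when cost is measured per unit of work rather than per hour.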

Industry-specific scenarios

Cloud GPUs aren’t limited to AI and machine learning applications; they are also widely used in HPC and data analytics. HPC workloads, such as computational fluid dynamics, molecular dynamics, and weather forecasting, can benefit from the parallel processing capabilities of GPUs. Cloud GPU platforms provide the necessary computational power to handle these complex simulations, enabling researchers and scientists to achieve faster results and gain deeper insights.

Data analytics is another area where cloud GPUs shine. The ability to process large datasets quickly and efficiently is crucial for businesses that rely on data-driven decision-making. Cloud GPU instances can accelerate data processing tasks, such as data sorting, filtering, and aggregation, making it easier to extract valuable insights from big data. This is particularly important for industries such as finance, healthcare, and retail, where real-time data analysis can provide a competitive edge.

For streaming services and media transcoding applications, particularly direct-to-consumer (DTC) services, developers and operations leaders face their own challenges. Cloud GPUs can help deliver lower latency, faster time to market, and better application performance.

Generative AI and real-time simulations

Generative AI, a subset of artificial intelligence, is another area where cloud GPUs play a crucial role. Generative models, such as generative adversarial networks (GANs), require significant computational resources to train and generate high-quality outputs. Cloud GPU platforms provide the necessary computational power to train these models efficiently, enabling innovators to develop cutting-edge applications in areas such as image synthesis, text generation, and audio processing.

Real-time simulations, such as those used in gaming, virtual reality, and autonomous vehicle testing, also benefit from using cloud GPUs. These simulations require low latency and high-throughput processing to ensure smooth and realistic experiences. By leveraging the parallel processing capabilities of GPUs, cloud providers can deliver the performance needed to support these demanding applications, making it possible to create more immersive and interactive experiences.

Cloud GPU pricing and options

When it comes to cloud GPU pricing, it’s important to understand the different models available. The cost of renting a lower-cost GPU such as the NVIDIA RTX™ 6000, for example, can vary depending on the provider and the specific configuration. On-demand pricing is a popular model that allows users to pay only for the resources they use, making it a cost-effective solution for start-ups and small businesses. This model is particularly useful for projects with variable workloads, as it enables users to scale resources up or down as needed.

For larger enterprises, subscription-based pricing models may be more suitable. These models often offer discounted rates for long-term commitments, making them a cost-effective solution for organizations with consistent and predictable workloads. Providers such as Akamai Cloud, Google Cloud, AWS, and Oracle Cloud offer a range of pricing options, including on-demand, reserved, and spot instances, to cater to different budget and performance requirements.
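The choice between on-demand and committed pricing comes down to a break-even calculation. The following sketch assumes a simple flat monthly commitment and a single on-demand hourly rate; the function names and all prices are hypothetical, and real plans often have more terms than this.

```python
def break_even_hours(on_demand_hourly: float, committed_monthly: float) -> float:
    """Hours per month above which the committed plan is cheaper."""
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return committed_monthly / on_demand_hourly


def cheaper_plan(hours_used: float, on_demand_hourly: float,
                 committed_monthly: float) -> str:
    """Name the cheaper plan for a given number of GPU hours in a month."""
    on_demand_cost = hours_used * on_demand_hourly
    return "committed" if committed_monthly < on_demand_cost else "on-demand"


# Hypothetical prices: $2.00/hour on demand versus a $900/month commitment.
print(break_even_hours(2.00, 900.0))    # hours needed to justify the commitment
print(cheaper_plan(200, 2.00, 900.0))   # light, bursty usage
print(cheaper_plan(600, 2.00, 900.0))   # steady, heavy usage
```

With these example figures the commitment pays off only above 450 GPU hours per month, which matches the guidance above: predictable, sustained workloads favor commitments, while variable ones favor on-demand.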

Cost analysis

When comparing the costs of different cloud GPU instances, it’s essential to consider factors such as the type of GPU, the amount of memory, and the bandwidth. For example, the NVIDIA RTX 4000 GPU is a high-performance option that is well suited for deep learning and HPC workloads. The hourly cost of renting a high-performance GPU can vary, but it is generally higher than that of lower-end GPUs. However, the performance benefits often justify the higher cost, especially for large-scale simulations and complex AI models.
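Memory is often the first of these factors to rule an instance in or out. As a rough sketch, the code below estimates the memory needed for a model's weights (parameter count times bytes per parameter) and picks the cheapest instance that fits. The catalog entries and prices are invented for illustration, and the 20% headroom for activations and other runtime overhead is an assumption, not a rule.

```python
from typing import Optional

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory for model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical catalog: (name, GPU memory in GB, $/hour). Not real offers.
CATALOG = [
    ("gpu-small", 16, 0.50),
    ("gpu-medium", 48, 1.50),
    ("gpu-large", 192, 6.00),
]


def cheapest_fit(required_gb: float, headroom: float = 1.2) -> Optional[str]:
    """Cheapest catalog entry whose memory covers required_gb times headroom."""
    fits = [(price, name) for name, mem, price in CATALOG
            if mem >= required_gb * headroom]
    return min(fits)[1] if fits else None


# A 7-billion-parameter model stored in 16-bit precision.
need = weight_memory_gb(7e9, "fp16")
print(f"{need:.1f} GB of weights -> {cheapest_fit(need)}")
```

Actual memory use depends on batch size, sequence length, and whether the workload is training or inference, so an estimate like this is a starting filter rather than a sizing guarantee.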

The B200 (Blackwell) is another advanced GPU that offers even greater computational power and efficiency. While the B200 is more expensive, it can provide significant performance improvements, making it a cost-effective choice for organizations with demanding workloads. When evaluating the cost of cloud GPU instances, it’s important to consider the total cost of ownership, including factors such as power consumption, cooling, and maintenance, which can add to the overall cost of on-premises solutions.
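The reason a pricier GPU can still be the cost-effective choice is simple arithmetic: what matters is cost per job, not cost per hour. The figures below are hypothetical and do not reflect actual prices or benchmark results for any GPU named here.

```python
def job_cost(hourly_price: float, job_hours: float) -> float:
    """Total rental cost for one job."""
    return hourly_price * job_hours


def is_cheaper_per_job(price_ratio: float, speedup: float) -> bool:
    """A faster GPU wins on cost when its speedup exceeds its price ratio."""
    return speedup > price_ratio


# Hypothetical figures: the faster GPU costs 3x per hour but finishes 4x sooner.
baseline = job_cost(hourly_price=2.00, job_hours=12.0)
faster = job_cost(hourly_price=6.00, job_hours=3.0)
print(f"baseline: ${baseline:.2f}, faster GPU: ${faster:.2f}")
print(is_cheaper_per_job(price_ratio=3.0, speedup=4.0))
```

If the speedup on your workload is smaller than the price ratio, the cheaper GPU remains the better deal, which is why benchmarking a representative job matters before committing.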

To learn how to reduce your AI inference costs, consider reading this report. It reveals how strategic infrastructure choices can significantly impact the performance and cost of AI, as demonstrated by a case study with Stable Diffusion. Discover how Akamai outperforms hyperscalers by delivering lower latency, higher throughput, and cost savings that can fuel further innovation.

Pricing models

Cloud providers offer a variety of pricing models to cater to different needs and budgets. On-demand pricing is a flexible model that allows users to pay for GPU resources as they use them. This model is ideal for projects with unpredictable workloads or for organizations that want to avoid long-term commitments. On-demand pricing ensures that users only pay for the resources they consume, making it a cost-effective solution for start-ups and small businesses.

Subscription-based pricing models, on the other hand, offer discounted rates for long-term commitments. These models are suitable for enterprises with consistent and predictable workloads, as they can provide more stable and predictable costs. Providers such as Akamai, AWS, Google Cloud, and Azure offer various subscription options, including reserved instances and savings plans, which can help organizations optimize their cloud spending.

Cost-effective solutions for start-ups and enterprises

For start-ups and small businesses, cost-effective solutions are crucial for staying competitive. Cloud GPU providers offer a range of options to meet the needs of these organizations, including on-demand pricing and low-cost GPU instances. These solutions enable start-ups to access high-performance computing resources without the need for significant up-front investment, allowing them to focus on innovation and growth.

Enterprises, on the other hand, often require more robust and scalable solutions. Cloud providers offer enterprise-grade cloud GPU instances with advanced features such as high-bandwidth memory (HBM), NVLink, and Tensor Cores. These features enhance the performance and efficiency of AI and machine learning workloads, making it possible to handle large-scale simulations and complex data analytics tasks. By leveraging these advanced features, enterprises can achieve faster time to insight and drive business value.

Conclusion

Cloud GPUs represent a powerful tool for organizations looking to enhance their computational capabilities and drive innovation. Whether it’s for AI and machine learning, high-performance computing, or real-time simulations, cloud GPU platforms provide the flexibility, scalability, and performance needed to tackle the most demanding workloads. By choosing the right cloud provider and pricing model, organizations can optimize their cloud spending and achieve their business objectives.

As demand for computational power continues to grow, the role of cloud GPUs will become increasingly important. By leveraging the capabilities of cloud GPU platforms, businesses can stay ahead of the curve and unlock new opportunities for growth and innovation. Whether you’re a start-up looking to develop cutting-edge AI applications or an enterprise seeking to optimize your HPC workflows, cloud GPUs offer a compelling solution that can help you achieve your goals.

Frequently Asked Questions

The key benefits of using cloud GPUs include scalable and flexible resources that can be provisioned on demand, reduced capital expenditure, enhanced operational efficiency, and the ability to handle large-scale simulations, data analytics, and AI training without the need for expensive on-premises hardware.

Cloud GPU platforms are designed to integrate seamlessly with popular frameworks like TensorFlow and PyTorch. This integration ensures that users can take full advantage of the computational capabilities of GPUs without needing to manage the underlying hardware, streamlining the development and deployment processes.

Bare-metal solutions in the context of cloud GPUs provide dedicated, single-tenant hardware that is optimized for high-performance computing (HPC) and data analytics. By eliminating the overhead associated with virtualization, bare-metal solutions can deliver superior performance and lower latency, making them ideal for applications that require real-time processing and high computational power.

Common use cases for cloud GPUs include artificial intelligence (AI) and machine learning, real-time simulations, media processing, and high-performance computing (HPC) tasks such as computational fluid dynamics, molecular dynamics, and weather forecasting. They are also used in data analytics to process large datasets quickly and efficiently.

Cloud GPUs enhance real-time simulations by providing low latency and high-throughput processing, which is essential for smooth and realistic experiences. This is particularly important for applications such as gaming, virtual reality, and autonomous vehicle testing, where performance and responsiveness are critical.

Cloud providers offer various pricing models for cloud GPUs, including on-demand pricing, subscription-based pricing, reserved instances, and spot instances. On-demand pricing allows users to pay for GPU resources as they use them, while subscription-based models offer discounted rates for long-term commitments.

Choosing the right cloud GPU instance depends on your specific workload requirements, such as the type of GPU, the amount of memory, and the bandwidth. Consider factors like the performance needed for your tasks, the total cost of ownership, and the provider’s pricing models to make an informed decision.

For start-ups and small businesses, cloud GPUs offer cost-effective solutions through on-demand pricing and low-cost GPU instances. These solutions allow start-ups to access high-performance computing resources without significant up-front investment, enabling them to focus on innovation and growth.

Cloud GPUs benefit enterprises by providing enterprise-grade instances with advanced features such as high-bandwidth memory (HBM), NVLink, and Tensor Cores. These features enhance the performance and efficiency of AI and machine learning workloads, enabling enterprises to handle large-scale simulations and complex data analytics tasks more effectively.
