Apache Hadoop is an open source framework that enables distributed storage and processing of large datasets across clusters of computers. It improves big data analytics by allowing organizations to process massive amounts of structured, semi-structured, and unstructured data efficiently, enabling scalability and cost-effective data management.

Big data analytics is the collection of tools, processes, and applications that businesses use to understand and interpret vast amounts of information. By unlocking insights hidden in complex datasets, big data analytics allows industries, organizations, and governments to make informed business decisions and to innovate faster. In a world where data is generated at an unprecedented rate, harnessing the power of big data analytics has become essential for achieving success and staying competitive.

Definition of big data analytics

Big data analytics is the process of examining large and complex datasets to discover patterns, trends, and insights. These datasets often include raw data gathered from multiple sources, such as social media platforms, Internet of Things (IoT) devices, email, websites, digital imagery, and business transactions. Unlike traditional data analysis methods, big data analytics uses advanced techniques to process data in any form — structured, semi-structured, or unstructured.

These advanced analysis techniques, such as data mining, clustering, and regression, let organizations examine vast amounts of information with unprecedented speed and precision to understand customer behavior, optimize pricing strategies, and identify new market opportunities. Whether it’s forecasting future sales, predicting equipment failures, or improving supply chain efficiency, big data analytics helps businesses stay ahead in a competitive marketplace.
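To make one of these techniques concrete, here is a minimal k-means clustering sketch in plain Python. It assumes two hypothetical customer features (visits per year and average basket size) and seeds the centroids with the first k points so the result is deterministic. Production libraries such as scikit-learn use more robust seeding (k-means++) and scale to far larger datasets.

```python
import math


def kmeans(points, k, iterations=20):
    """Group 2-D points into k clusters with Lloyd's algorithm.

    Centroids are seeded with the first k points for determinism;
    this is an illustrative simplification, not a production choice.
    """
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # assignments stopped changing, so the clusters have converged
        centroids = new_centroids
    return centroids, labels


# Hypothetical customers: (visits per year, average basket size in dollars).
customers = [(2, 20.0), (3, 25.0), (2, 22.0), (20, 150.0), (22, 160.0), (21, 155.0)]
centroids, labels = kmeans(customers, k=2)
# labels → [0, 0, 0, 1, 1, 1]: occasional shoppers vs. frequent big spenders
```

Because distance is computed on raw values, the larger-scale feature (basket size) dominates here; in practice features are usually standardized before clustering.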

Big data analytics vs. traditional data analytics

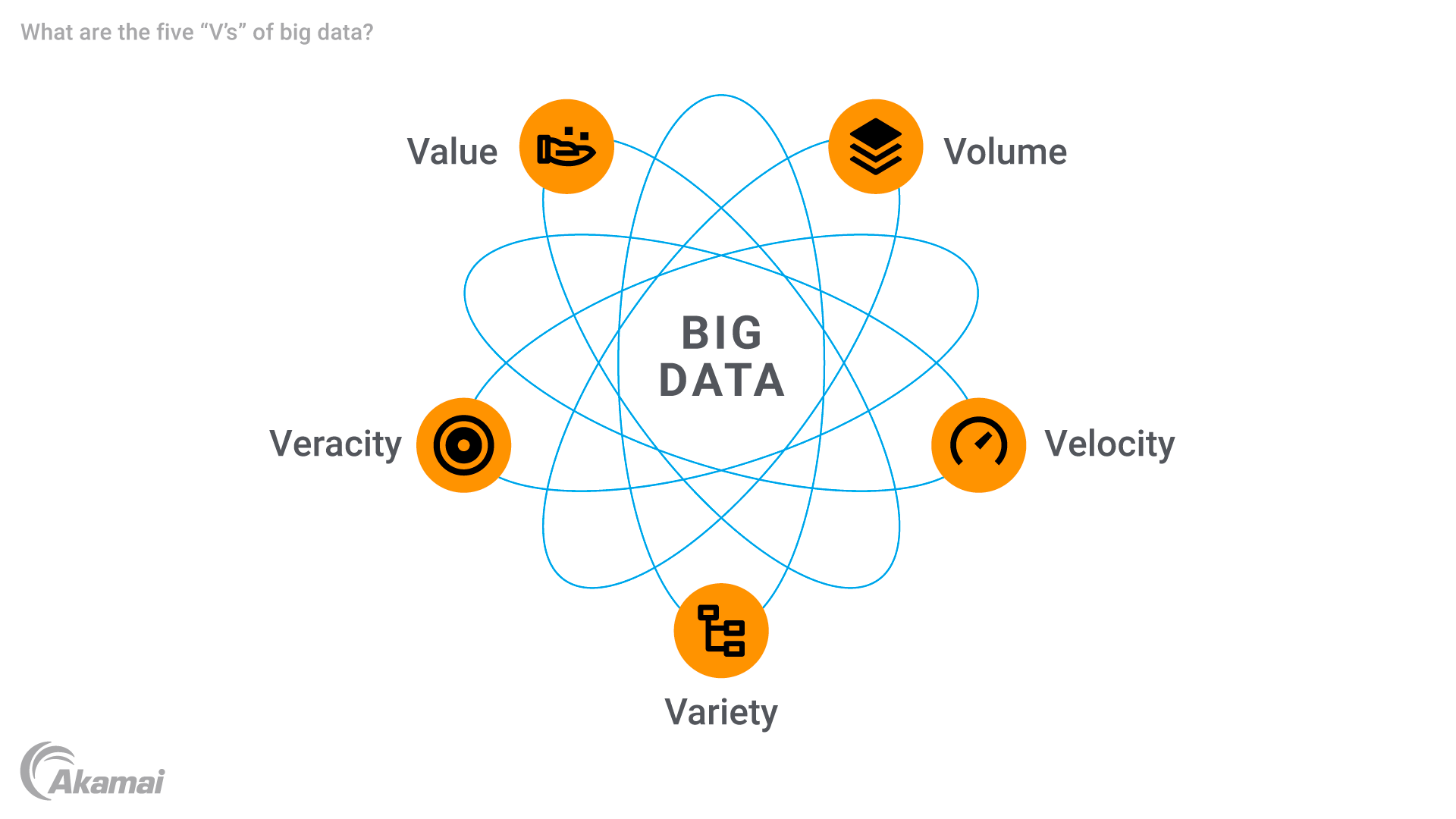

Big data analytics differs significantly from traditional data analytics. Big data is distinguished from traditional analytics datasets by five primary attributes, often referred to as the five “V’s.”

- Volume: Big data technologies can process large volumes of data that traditional methods struggle to handle. For example, analyzing millions of social media posts or continuous streams of IoT sensor data requires specialized tools. Traditional data systems, like relational databases and spreadsheets, are better suited for smaller, well-structured datasets.

- Velocity: Big data systems are designed for streaming data and real-time data processing, enabling organizations to respond to events as they occur. Traditional data systems tend to process data in batches, which is slower and less responsive to the needs of the moment.

- Variety: Big data includes a broader range of data types, including unstructured data like videos, images, and emails, as well as semi-structured data such as XML files. Traditional data, in contrast, primarily focuses on structured data stored in relational tables.

- Veracity: With the massive amounts of raw data collected, ensuring data quality and accuracy is a significant challenge. Inconsistent, incomplete, or inaccurate data can undermine the reliability of predictive analytics and other insights. Veracity highlights the importance of cleaning, validating, and managing data to build trust in the results of analytics.

- Value: The goal of big data is to derive valuable insights that can drive decision-making, optimize operations, and create opportunities. Using big data enables organizations to take raw information and develop actionable outcomes that can improve the customer experience, enhance operational efficiency, or drive innovations in fields like healthcare and retail.
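The velocity contrast between batch and streaming processing can be sketched in a few lines of Python. The example below is illustrative only: the sensor readings are hypothetical, and `RollingAverage` is a hypothetical helper, not part of any big data framework. The batch function produces one answer after all the data has arrived, while the streaming class produces an updated answer after every reading while keeping only a bounded window in memory.

```python
from collections import deque


def batch_average(readings):
    """Batch style: wait for the whole dataset, then compute once."""
    return sum(readings) / len(readings)


class RollingAverage:
    """Streaming style: update the result as each reading arrives.

    Only the most recent `window` readings are kept, so memory stays
    bounded no matter how long the stream runs.
    """

    def __init__(self, window):
        self.values = deque(maxlen=window)
        self.total = 0.0

    def add(self, value):
        if len(self.values) == self.values.maxlen:
            self.total -= self.values[0]  # evict the oldest reading from the sum
        self.values.append(value)  # deque drops the oldest item automatically
        self.total += value
        return self.total / len(self.values)


readings = [20.0, 21.0, 22.0, 40.0]  # hypothetical sensor values
tracker = RollingAverage(window=3)
live = [tracker.add(r) for r in readings]  # a fresh result after every reading
final = batch_average(readings)            # a single result, only at the end
```

A spike such as the final 40.0 reading shows up in the streaming output immediately, which is what lets organizations respond to events as they occur.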

Types of big data

Big data is typically categorized into three main types, each requiring different approaches for analysis and management:

- Structured data: This is data organized in a predefined format, such as tables and rows in a relational database. Examples include customer purchase records or sales data stored in SQL databases. Structured data is easier to analyze using traditional business intelligence tools.

- Unstructured data: This type includes information without a specific structure, such as documents, email, social media posts, videos, or audio recordings. Analyzing unstructured data often requires advanced analytics techniques like natural language processing or image recognition to glean meaningful insights.

- Semi-structured data: Semi-structured data falls somewhere between structured and unstructured formats. It lacks the fixed schema of structured data but includes organizational markers, such as tags or metadata, to separate elements. Examples include JSON documents, XML files, and IoT device logs. This format allows for greater flexibility while still offering enough structure to simplify analysis.
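The practical difference between the three types shows up in the code needed to read them. This sketch uses hypothetical sample records and only the Python standard library: structured CSV rows share fixed columns, semi-structured JSON carries keys that may vary per record, and unstructured text has to be tokenized before anything can be counted.

```python
import csv
import io
import json
import re

# Hypothetical sample records, one per data type.
structured = "order_id,customer,amount\n1001,alice,25.50\n1002,bob,40.00\n"
semi_structured = (
    '{"device": "sensor-7", "readings": '
    '[{"temp": 21.5}, {"temp": 22.1, "humidity": 40}]}'
)
unstructured = "Great service, but delivery was late. Would order again!"

# Structured: a fixed schema means every row has the same named columns.
rows = list(csv.DictReader(io.StringIO(structured)))
total_sales = sum(float(row["amount"]) for row in rows)

# Semi-structured: keys act as tags, but fields can vary per record,
# so the code must tolerate missing keys (here, "humidity").
doc = json.loads(semi_structured)
temps = [r["temp"] for r in doc["readings"]]
humidity = [r.get("humidity") for r in doc["readings"]]

# Unstructured: no schema at all. Even a crude keyword count needs
# tokenizing first; real systems use natural language processing instead.
words = re.findall(r"[a-z']+", unstructured.lower())
negative_hits = sum(w in {"late", "broken", "slow"} for w in words)
```

The keyword list is a deliberately naive stand-in for the natural language processing techniques described above.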

Types of big data analytics

Big data analytics can be divided into four key types, each serving a unique purpose.

- Descriptive analytics: This type focuses on summarizing historical data to understand past trends. For instance, descriptive analytics can track sales performance over a specific period, helping businesses identify strengths and weaknesses.

- Diagnostic analytics: Diagnostic analytics examines the underlying reasons for observable trends. By analyzing correlations within the data, businesses can discover why sales dropped or why customer satisfaction declined during a specific time frame.

- Predictive analytics: Predictive analytics uses machine learning and statistical models to forecast future outcomes. For example, ecommerce companies use predictive modeling to anticipate product demand and optimize inventory levels.

- Prescriptive analytics: This advanced form of analytics provides actionable recommendations based on data insights. Prescriptive analytics helps organizations decide the best course of action to achieve desired outcomes, such as optimizing supply chain operations.

How big data analytics works

Big data analytics follows a systematic process to transform raw data into actionable insights:

- Data collection: This involves gathering large volumes of data from different sources, such as ecommerce platforms, social media, and IoT devices. The collected data must be stored so it can be processed efficiently later; raw data is often kept in data lakes or data warehouses.

- Data integration: After collection, data is integrated into a unified system to enable seamless analysis. Data integration tools combine disparate data types from multiple platforms to ensure consistency and accuracy.

- Data processing: Specialized big data technologies like Hadoop and Spark are used to process and organize complex datasets efficiently. These tools enable end-to-end data workflows, ensuring the data is ready for analysis.

- Data analysis: This stage uses machine learning, predictive modeling, and statistical analysis to uncover trends, patterns, and correlations. For example, predictive analytics can forecast customer behavior, allowing businesses to make better decisions.

- Data visualization: The results of data analysis are presented in easy-to-understand formats like charts, graphs, and dashboards. Tools like Microsoft Power BI and Tableau are commonly used to create compelling visualizations.
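The five stages above can be traced end to end in a deliberately tiny pipeline. This is a single-process sketch under stated assumptions: the two data sources, their field names, and the helper functions (`integrate`, `process`, `analyze`, `visualize`) are hypothetical, and a text bar chart stands in for a dashboard tool. At real scale, each stage would run on distributed systems such as Hadoop or Spark.

```python
from collections import defaultdict

# 1. Data collection: hypothetical records from two sources with different shapes.
web_orders = [
    {"sku": "A1", "qty": 2, "region": "EU"},
    {"sku": "B2", "qty": 1, "region": "US"},
]
store_sales = [("A1", "us", 3), ("A1", "eu", None)]  # (sku, region, quantity)


def integrate(web, store):
    """2. Data integration: map both sources onto one consistent schema."""
    unified = [{"sku": o["sku"], "region": o["region"], "qty": o["qty"]} for o in web]
    unified += [{"sku": s, "region": r.upper(), "qty": q} for s, r, q in store]
    return unified


def process(records):
    """3. Data processing: drop incomplete rows, then aggregate by region."""
    totals = defaultdict(int)
    for rec in records:
        if rec["qty"] is None:
            continue  # incomplete record; skipped rather than guessed
        totals[rec["region"]] += rec["qty"]
    return dict(totals)


def analyze(totals):
    """4. Data analysis: find the region with the largest share of units."""
    grand_total = sum(totals.values())
    top = max(totals, key=totals.get)
    return top, totals[top] / grand_total


def visualize(totals):
    """5. Data visualization: a text bar chart stands in for a dashboard."""
    return "\n".join(f"{region:<3} {'#' * qty}" for region, qty in sorted(totals.items()))


totals = process(integrate(web_orders, store_sales))
top_region, share = analyze(totals)
print(visualize(totals))
```

Normalizing the region codes during integration matters: without `.upper()`, "us" and "US" would be counted as different regions.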

Big data analytics tools and technologies

Big data analytics relies on a combination of cutting-edge technologies and tools that enable organizations to process, analyze, and derive insights from large and complex datasets.

- Hadoop and Spark: Apache Hadoop and Apache Spark are open source frameworks designed for managing and processing large volumes of data across distributed systems. Hadoop’s robust storage system, HDFS (Hadoop Distributed File System), and its MapReduce processing model allow organizations to handle big data efficiently. Spark complements Hadoop by processing data in memory, significantly speeding up iterative tasks like machine learning and real-time analytics, making it ideal for businesses requiring fast insights.

- NoSQL databases: Unlike traditional SQL databases, NoSQL databases like MongoDB and Cassandra are designed to handle unstructured and semi-structured data, such as customer reviews, social media posts, or IoT device logs. These databases provide scalability and flexibility, allowing organizations to manage diverse data types without the constraints of a fixed schema. Their ability to process high volumes of data in real time makes them a critical component of modern big data analytics.

- Cloud computing: Cloud platforms, such as Akamai Cloud, offer scalable storage and processing solutions for big data. These platforms eliminate the need for businesses to invest in costly on-premises infrastructure while providing flexibility to adjust resources based on demand. With integrated tools for data processing and analytics, cloud computing makes implementing big data projects accessible and cost-effective.

- Business intelligence tools: Business intelligence tools like Tableau, Microsoft Power BI, and QlikView enable organizations to visualize data insights through interactive dashboards and reports. These tools help decision-makers understand complex data by presenting it in clear and intuitive formats, such as charts, graphs, and heat maps. By bridging the gap between data analysts and business leaders, these tools enhance data-driven decision-making across industries.

- Data engineering platforms: Tools like Apache Airflow and Dataiku help manage big data workflows end to end, from data ingestion through processing and storage. They automate and schedule data pipelines, ensuring that data is efficiently prepared and integrated for analysis. These platforms are essential for handling large-scale projects that involve multiple data sources and processing frameworks.

- Machine learning libraries and frameworks: Libraries such as TensorFlow, scikit-learn, and PyTorch provide the building blocks for developing machine learning models. These frameworks enable organizations to implement predictive analytics, automate decision-making, and uncover hidden patterns in their data. Machine learning tools are crucial for deriving advanced insights from big data.

- Data visualization platforms: Advanced visualization tools like Looker and D3.js allow organizations to create custom visual representations of their data. These tools enhance the ability to communicate insights effectively, enabling businesses to identify trends, correlations, and opportunities at a glance.

- Relational and hybrid databases: While NoSQL databases are critical for unstructured data, relational databases like PostgreSQL and hybrid solutions like Google BigQuery still play a key role. They are used for structured data analysis and support SQL queries, ensuring compatibility with traditional business systems.

- Data lakes and data warehouses: Data storage solutions like Snowflake and Amazon Redshift allow organizations to store vast amounts of raw or processed data for analysis. Data lakes are ideal for holding raw, unstructured, and semi-structured data, while data warehouses are optimized for structured data and querying. Both are essential for effective big data management.
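The MapReduce model mentioned above splits a job into a map phase, a shuffle that groups intermediate results by key, and a reduce phase. The sketch below runs all three phases in a single Python process purely to show the data flow; it is not the Hadoop API. On a real cluster, many mappers run in parallel on HDFS blocks, and the shuffle moves intermediate pairs across the network to the reducers.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map: emit a (key, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine every value emitted for one key."""
    return key, sum(values)


def word_count(lines):
    """Chain the three phases over a list of input records."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


counts = word_count(["big data", "Big insights from data"])
# counts → {'big': 2, 'data': 2, 'insights': 1, 'from': 1}
```

Because each map call looks at one record and each reduce call looks at one key, both phases can be spread across machines independently, which is what makes the model scale.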

The benefits of big data analytics

Big data analytics offers numerous benefits that drive success across industries:

- Improved decision-making: By analyzing large datasets, businesses can uncover insights that lead to smarter decisions. For example, analyzing customer preferences helps companies develop products that meet specific needs.

- Enhanced operational efficiency: Data-driven insights enable organizations to optimize workflows and resource allocation, improving productivity and reducing costs.

- Better customer experience: Businesses can personalize their services based on customer data, creating a more engaging and satisfying user experience.

- Increased innovation: By identifying emerging trends and opportunities, big data analytics fosters product development and market expansion.

How big data analytics is used in the real world

Big data analytics has practical applications in nearly every industry, transforming operations, improving decision-making, and uncovering new opportunities.

- Healthcare: Big data analytics allows healthcare providers to analyze patient data, electronic health records, and data from real-time monitoring devices to forecast disease outbreaks and improve patient outcomes. For instance, wearable devices collect real-time health metrics, enabling personalized treatments and early diagnosis. Analytics also helps hospitals manage resources efficiently, such as predicting patient admissions and optimizing staff allocation.

- Retail and ecommerce: Companies like Amazon use big data analytics to provide personalized shopping experiences by recommending products based on past purchases and browsing behavior. Predictive analytics helps optimize pricing strategies by analyzing market trends and customer preferences. Retailers also leverage data to improve inventory management, ensuring the right products are available at the right time and location.

- Supply chain management: Big data analytics enhances supply chain efficiency by analyzing large volumes of data to forecast demand, reduce delays, and streamline logistics. Companies can optimize routes for delivery, monitor real-time inventory levels, and predict disruptions before they occur. This not only reduces operational costs but also improves customer satisfaction by ensuring timely deliveries.

- Finance: Financial institutions use big data analytics for fraud detection, identifying unusual transaction patterns in real time to prevent financial losses. Market trends are assessed using historical and real-time data, enabling better investment decisions. Additionally, customer data is analyzed to create personalized banking experiences, such as tailored loan offers or spending insights.

- Manufacturing: In manufacturing, big data analytics powers predictive maintenance by analyzing sensor data from equipment to anticipate failures before they occur. This reduces downtime and extends the life of machinery, saving companies significant costs. Additionally, analytics is used to optimize production processes, ensuring better quality control and efficient use of materials.

- Energy and utilities: Big data analytics helps energy companies monitor usage patterns and predict demand to avoid shortages and optimize supply. It also enables the integration of renewable energy sources by analyzing data from smart grids. Predictive analytics in utilities improves maintenance schedules, reduces power outages, and enhances service reliability.

- Transportation and logistics: The transportation industry leverages big data analytics for route optimization, fuel efficiency, and predictive maintenance of vehicles. Ride-sharing apps like Uber use real-time analytics to match riders with drivers efficiently. Logistics companies use data to improve delivery schedules, track shipments, and forecast transportation needs.

- Education: Educational institutions use big data analytics to analyze student performance and personalize learning experiences. By examining patterns in test scores and participation, educators can identify students who need additional support. Analytics also helps universities optimize administrative functions, such as resource allocation and course planning.

- Agriculture: Big data analytics is revolutionizing agriculture by enabling precision farming, where data from sensors and drones is used to monitor soil conditions, crop health, and weather patterns. This helps farmers optimize irrigation, fertilization, and pest control, leading to higher yields and reduced waste.

- Media and entertainment: Streaming services like Netflix and Spotify use big data analytics to recommend personalized content based on user preferences and viewing habits. Analytics also helps companies predict trends in content consumption, enabling them to create targeted advertising campaigns. Additionally, big data is used to gauge audience engagement and refine production strategies.

- Government and public sector: Governments use big data analytics to improve public services, predict and mitigate natural disasters, and enhance public safety. For instance, analytics is used in urban planning to optimize traffic flow and reduce congestion. Law enforcement agencies analyze data to identify crime patterns and allocate resources effectively.

- Travel and hospitality: Airlines, hotels, and travel agencies use big data analytics to predict customer preferences and offer tailored recommendations. Analytics helps optimize pricing strategies based on demand fluctuations and historical data. In hospitality, customer feedback and preferences are analyzed to enhance guest experiences, from personalized room setups to targeted promotions.
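The fraud detection use case in finance rests on spotting transactions that deviate from a customer’s usual pattern. As a minimal sketch, assuming a single feature (transaction amount) and a hypothetical threshold of three standard deviations, a z-score check looks like this. Production fraud systems combine many signals, such as location, merchant, and timing, in trained models rather than one fixed rule.

```python
import statistics


def flag_unusual(amounts, new_amount, threshold=3.0):
    """Flag a transaction whose amount is far from the customer's history.

    Uses a z-score: how many standard deviations the new amount sits from
    the historical mean. The 3.0 threshold is an illustrative assumption.
    """
    mean = statistics.mean(amounts)
    stdev = statistics.stdev(amounts)  # sample standard deviation; needs 2+ values
    if stdev == 0:
        return new_amount != mean  # flat history: any change is unusual
    return abs(new_amount - mean) / stdev > threshold


history = [42.0, 38.5, 55.0, 47.25, 40.0, 51.0]  # hypothetical past purchases
print(flag_unusual(history, 49.99))  # False: in line with past spending
print(flag_unusual(history, 900.0))  # True: far outside the usual range
```

The same pattern, comparing new readings against a learned baseline, also underlies the predictive maintenance examples in manufacturing and utilities.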

Challenges of big data analytics

Despite its many benefits, big data analytics faces several challenges:

- Data quality: Poor data quality is one of the most significant obstacles in big data analytics. When data is incomplete, inconsistent, or inaccurate, it can lead to flawed insights, which may negatively impact business decisions and operational efficiency. Ensuring data quality requires robust data collection, validation, and cleansing processes, which can be resource-intensive.

- Data integration: Combining data from different sources and formats is a complex process that requires expertise in data engineering. For example, integrating unstructured data from social media with structured data from a relational database can pose technical challenges. Without seamless data integration, organizations risk missing critical insights or duplicating efforts.

- Privacy concerns: The large volumes of personal and sensitive data analyzed in big data initiatives bring significant privacy risks. Organizations must safeguard this information to prevent data breaches and comply with privacy regulations such as GDPR. Failure to address privacy concerns can result in reputational damage and hefty legal penalties.

- Scalability: As organizations generate and store increasing amounts of data, their systems must scale to handle these large datasets. This requires continuous investment in infrastructure, such as cloud computing or data storage systems, which can be expensive and logistically challenging to manage.

- Skill shortages: There’s a growing demand for skilled professionals in data science, data engineering, and data analysis. However, the supply of trained experts hasn’t kept pace with demand, making it difficult for organizations to implement big data projects effectively.
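Addressing the data quality challenge usually starts with explicit validation rules. The sketch below is hypothetical: the field names (`id`, `amount`) and the rules (required ID, unique ID, non-negative numeric amount) are illustrative assumptions. It separates clean records from rejected ones and records why each rejection happened, so problems can be traced back to their source instead of silently skewing results.

```python
def clean(records):
    """Split records into valid, deduplicated rows and rejected rows.

    The rules below (required id, unique id, non-negative numeric amount)
    are hypothetical examples of validation checks.
    """
    seen_ids = set()
    valid, rejected = [], []
    for rec in records:
        problems = []
        if not rec.get("id"):
            problems.append("missing id")
        elif rec["id"] in seen_ids:
            problems.append("duplicate id")
        amount = rec.get("amount")
        if not isinstance(amount, (int, float)) or amount < 0:
            problems.append("invalid amount")
        if problems:
            rejected.append((rec, problems))  # keep the reason for auditing
        else:
            seen_ids.add(rec["id"])
            valid.append(rec)
    return valid, rejected


records = [
    {"id": "t1", "amount": 10.0},
    {"id": "t1", "amount": 10.0},  # verbatim duplicate
    {"id": "", "amount": 5},       # incomplete
    {"id": "t2", "amount": -3},    # inaccurate
    {"id": "t3", "amount": "12"},  # inconsistent type
]
valid, rejected = clean(records)
```

Keeping the rejection reasons, rather than just dropping bad rows, is what makes cleansing auditable and helps build trust in downstream analytics.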

Future trends in big data analytics

The future of big data analytics will be shaped by several key trends that promise to enhance its capabilities and accessibility:

- AI and machine learning integration: Artificial intelligence and machine learning are becoming integral to big data analytics, making data processing smarter and more efficient. Advanced algorithms can analyze vast datasets faster, identify patterns, and predict outcomes with greater accuracy. This trend will also enhance prescriptive analytics, enabling businesses to make proactive decisions based on data insights.

- Edge computing: Edge computing involves processing data closer to its source, such as on IoT devices, rather than relying solely on centralized systems. This reduces latency and improves real-time analytics, making it especially valuable in industries like healthcare, where split-second decisions are crucial. By reducing the need for constant data transfers, edge computing also lowers bandwidth costs and improves system reliability.

- Advanced data visualization: As big data becomes increasingly complex, intuitive and accessible visualization tools will play a critical role. These tools will help nontechnical users understand data insights and apply them to business strategies. Innovations in visualization, such as interactive dashboards and augmented reality interfaces, will make data-driven decision-making more engaging and effective.

- Cloud-based analytics platforms: The continued rise of cloud computing will make big data analytics more accessible to businesses of all sizes. Cloud platforms offer scalable resources, enabling companies to manage large amounts of data without investing heavily in on-premises infrastructure. Cloud-based solutions also promote collaboration by allowing teams to access data and analytics tools from anywhere.

- Ethical data practices: As data analytics grows in influence, there will be a stronger focus on ethical data collection, processing, and usage. Companies will need to address bias in AI algorithms, ensure transparency in data analysis, and develop frameworks for responsible data handling.
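The bandwidth savings of edge computing come from summarizing data where it is produced. In this hypothetical sketch, the function runs on an IoT device, reduces a window of raw readings to a small summary, and decides locally whether to raise an alert, so only the summary is sent upstream. The field names and alert rule are assumptions for illustration.

```python
import statistics


def summarize_window(readings, alert_above):
    """Run on the edge device: reduce a window of raw readings to a summary.

    Only this small dictionary is sent upstream, instead of every reading.
    The field names and alert rule are hypothetical.
    """
    peak = max(readings)
    return {
        "count": len(readings),
        "mean": statistics.fmean(readings),
        "max": peak,
        "alert": peak > alert_above,  # decided locally, with no network round trip
    }


window = [70.0, 72.0, 71.0, 90.0]  # hypothetical temperature readings
summary = summarize_window(window, alert_above=85.0)
```

With thousands of readings per window, sending four fields instead of every value is where the latency and bandwidth gains described above come from.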


Why customers choose Akamai

Akamai is the cybersecurity and cloud computing company that powers and protects business online. Our market-leading security solutions, superior threat intelligence, and global operations team provide defense in depth to safeguard enterprise data and applications everywhere. Akamai’s full-stack cloud computing solutions deliver performance and affordability on the world’s most distributed platform. Global enterprises trust Akamai to provide the industry-leading reliability, scale, and expertise they need to grow their business with confidence.